Lecture 5: Sequence Models

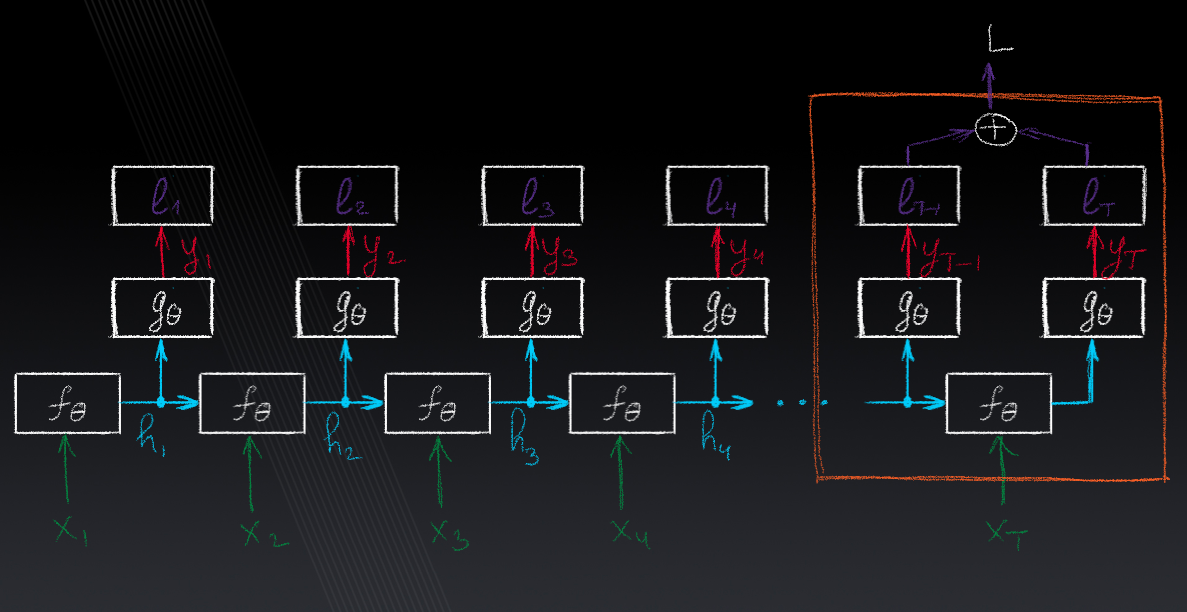

RNN model, input-output sequence relationships, non-sequential input, layered RNN, backpropagation through time, word embeddings, attention, transformers.

Slides

- Part I: RNNs

- Part II: Attention and Transformers

Videos

- Part I

- Part II: Word embeddings

Lecture Notes

Accompanying notes for this lecture can be found here.